Use new algorithm to run the batch processing¶

Create the calculator¶

All the data processing is done by the Calculator. The input data

and output data are stored in its attributes.

from crystalmapping.utils import Calculator

calculator = Calculator()

Load the experiment Data¶

In this example, we load the data from a database. You can also use your

data source as long as there is an DataArray of the exposure images.

from databroker import catalog

list(catalog)

['test_data_in_database',

'analysis',

'bt_safN_306132',

'pdf',

'saf_307381',

'xpd']

db = catalog["xpd"]

UID = '257b5581-ca78-4309-9c50-b4d65d80152a'

run = db[UID]

run

BlueskyRun

uid='257b5581-ca78-4309-9c50-b4d65d80152a'

exit_status='success'

2021-03-19 22:48:19.253 -- 2021-03-19 23:13:41.753

Streams:

* primary

data = run.primary.to_dask()

data

<xarray.Dataset>

Dimensions: (dim_0: 1, dim_1: 3888, dim_2: 3072, time: 1001)

Coordinates:

* time (time) float64 1.616e+09 1.616e+09 ... 1.616e+09

Dimensions without coordinates: dim_0, dim_1, dim_2

Data variables:

dexela_stats1_total (time) float64 dask.array<chunksize=(1,), meta=np.ndarray>

dexela_image (time, dim_0, dim_1, dim_2) float64 dask.array<chunksize=(1, 1, 3888, 3072), meta=np.ndarray>

mPhi (time) float64 dask.array<chunksize=(1,), meta=np.ndarray>

mPhi_user_setpoint (time) float64 dask.array<chunksize=(1,), meta=np.ndarray>- dim_0: 1

- dim_1: 3888

- dim_2: 3072

- time: 1001

- time(time)float641.616e+09 1.616e+09 ... 1.616e+09

array([1.616209e+09, 1.616209e+09, 1.616209e+09, ..., 1.616210e+09, 1.616210e+09, 1.616210e+09])

- dexela_stats1_total(time)float64dask.array<chunksize=(1,), meta=np.ndarray>

- object :

- dexela

Array Chunk Bytes 7.82 kiB 8 B Shape (1001,) (1,) Count 2002 Tasks 1001 Chunks Type float64 numpy.ndarray - dexela_image(time, dim_0, dim_1, dim_2)float64dask.array<chunksize=(1, 1, 3888, 3072), meta=np.ndarray>

- object :

- dexela

Array Chunk Bytes 89.08 GiB 91.12 MiB Shape (1001, 1, 3888, 3072) (1, 1, 3888, 3072) Count 3003 Tasks 1001 Chunks Type float64 numpy.ndarray - mPhi(time)float64dask.array<chunksize=(1,), meta=np.ndarray>

- object :

- mPhi

Array Chunk Bytes 7.82 kiB 8 B Shape (1001,) (1,) Count 2002 Tasks 1001 Chunks Type float64 numpy.ndarray - mPhi_user_setpoint(time)float64dask.array<chunksize=(1,), meta=np.ndarray>

- object :

- mPhi

Array Chunk Bytes 7.82 kiB 8 B Shape (1001,) (1,) Count 2002 Tasks 1001 Chunks Type float64 numpy.ndarray

Here, we give the data to the attribute.

calculator.frames_arr = data["dexela_image"][::10]

calculator.frames_arr

<xarray.DataArray 'dexela_image' (time: 101, dim_0: 1, dim_1: 3888, dim_2: 3072)>

dask.array<getitem, shape=(101, 1, 3888, 3072), dtype=float64, chunksize=(1, 1, 3888, 3072), chunktype=numpy.ndarray>

Coordinates:

* time (time) float64 1.616e+09 1.616e+09 ... 1.616e+09 1.616e+09

Dimensions without coordinates: dim_0, dim_1, dim_2

Attributes:

object: dexela- time: 101

- dim_0: 1

- dim_1: 3888

- dim_2: 3072

- dask.array<chunksize=(1, 1, 3888, 3072), meta=np.ndarray>

Array Chunk Bytes 8.99 GiB 91.12 MiB Shape (101, 1, 3888, 3072) (1, 1, 3888, 3072) Count 3104 Tasks 101 Chunks Type float64 numpy.ndarray - time(time)float641.616e+09 1.616e+09 ... 1.616e+09

array([1.616209e+09, 1.616209e+09, 1.616209e+09, 1.616209e+09, 1.616209e+09, 1.616209e+09, 1.616209e+09, 1.616209e+09, 1.616209e+09, 1.616209e+09, 1.616209e+09, 1.616209e+09, 1.616209e+09, 1.616209e+09, 1.616209e+09, 1.616209e+09, 1.616209e+09, 1.616209e+09, 1.616209e+09, 1.616209e+09, 1.616209e+09, 1.616209e+09, 1.616209e+09, 1.616209e+09, 1.616209e+09, 1.616209e+09, 1.616209e+09, 1.616209e+09, 1.616209e+09, 1.616209e+09, 1.616209e+09, 1.616209e+09, 1.616209e+09, 1.616209e+09, 1.616209e+09, 1.616209e+09, 1.616209e+09, 1.616209e+09, 1.616209e+09, 1.616209e+09, 1.616209e+09, 1.616209e+09, 1.616209e+09, 1.616209e+09, 1.616209e+09, 1.616209e+09, 1.616209e+09, 1.616209e+09, 1.616209e+09, 1.616209e+09, 1.616209e+09, 1.616209e+09, 1.616209e+09, 1.616209e+09, 1.616209e+09, 1.616209e+09, 1.616210e+09, 1.616210e+09, 1.616210e+09, 1.616210e+09, 1.616210e+09, 1.616210e+09, 1.616210e+09, 1.616210e+09, 1.616210e+09, 1.616210e+09, 1.616210e+09, 1.616210e+09, 1.616210e+09, 1.616210e+09, 1.616210e+09, 1.616210e+09, 1.616210e+09, 1.616210e+09, 1.616210e+09, 1.616210e+09, 1.616210e+09, 1.616210e+09, 1.616210e+09, 1.616210e+09, 1.616210e+09, 1.616210e+09, 1.616210e+09, 1.616210e+09, 1.616210e+09, 1.616210e+09, 1.616210e+09, 1.616210e+09, 1.616210e+09, 1.616210e+09, 1.616210e+09, 1.616210e+09, 1.616210e+09, 1.616210e+09, 1.616210e+09, 1.616210e+09, 1.616210e+09, 1.616210e+09, 1.616210e+09, 1.616210e+09, 1.616210e+09])

- object :

- dexela

We also need the metadata of the grid scan, especially the shape of

the grid. If not provided, the calculation can still be done but the

coordinates of the grain map is unknown.

# show the metadata

metadata = dict(run.metadata["start"])

# Because I terminate the data. I nedd to update the metadata.

metadata["shape"] = [101]

metadata["extents"] = ([-0.5, 0.499],)

calculator.metadata = metadata

calculator.metadata

{'time': 1616208499.2537348,

'uid': '257b5581-ca78-4309-9c50-b4d65d80152a',

'versions': {'ophyd': '1.3.3', 'bluesky': '1.6.7'},

'scan_id': 45,

'proposal_id': '307690',

'plan_type': 'generator',

'plan_name': 'rel_grid_scan',

'detectors': ['dexela'],

'motors': ['mPhi'],

'num_points': 1001,

'num_intervals': 1000,

'plan_args': {'detectors': ["XPDDDexelaDetector(prefix='XF:28IDD-ES:2{Det:DEX}', name='dexela', read_attrs=['stats1', 'stats1.total', 'tiff'], configuration_attrs=['cam', 'cam.acquire_period', 'cam.acquire_time', 'cam.image_mode', 'cam.trigger_mode', 'stats1', 'stats1.configuration_names', 'stats1.port_name', 'stats1.asyn_pipeline_config', 'stats1.blocking_callbacks', 'stats1.enable', 'stats1.nd_array_port', 'stats1.plugin_type', 'stats1.bgd_width', 'stats1.centroid_threshold', 'stats1.compute_centroid', 'stats1.compute_histogram', 'stats1.compute_profiles', 'stats1.compute_statistics', 'stats1.hist_max', 'stats1.hist_min', 'stats1.hist_size', 'stats1.profile_cursor', 'stats1.profile_size', 'stats1.ts_num_points', 'tiff', 'detector_type'])"],

'args': ["EpicsMotor(prefix='XF:28IDD-ES:2{Stg:Stack-Ax:Phi}Mtr', name='mPhi', settle_time=0.0, timeout=None, read_attrs=['user_readback', 'user_setpoint'], configuration_attrs=['user_offset', 'user_offset_dir', 'velocity', 'acceleration', 'motor_egu'])",

-0.5,

0.5,

1001],

'per_step': 'None'},

'hints': {'gridding': 'rectilinear', 'dimensions': [[['mPhi'], 'primary']]},

'shape': [101],

'extents': ([-0.5, 0.499],),

'snaking': [False],

'plan_pattern': 'outer_product',

'plan_pattern_args': {'args': ["EpicsMotor(prefix='XF:28IDD-ES:2{Stg:Stack-Ax:Phi}Mtr', name='mPhi', settle_time=0.0, timeout=None, read_attrs=['user_readback', 'user_setpoint'], configuration_attrs=['user_offset', 'user_offset_dir', 'velocity', 'acceleration', 'motor_egu'])",

-0.5,

0.5,

1001]},

'plan_pattern_module': 'bluesky.plan_patterns',

'task': 'a single point rocking curve',

'sample': 'PARADIM-2',

'beam': 'slit'}

We can also apply the geometry of the experiment to let the calculator calculate the Q value of the peaks. This is optional.

from pyFAI.azimuthalIntegrator import AzimuthalIntegrator

calculator.ai = AzimuthalIntegrator(dist=200, wavelength=0.186, detector="dexela2923", poni1=1536, poni2=1944)

Process the data¶

The simplest way to use the calculator is to use the auto_process.

It takes three necessary parameters. You will find the meaning of them

in the docs.

help(calculator.auto_process)

Help on method auto_process in module crystalmapping.utils:

auto_process(num_wins: int, hw_wins: int, diameter: int, index_filter: slice = None, *args, **kwargs) -> None method of crystalmapping.utils.Calculator instance

Automatically process the data in the standard protocol.

Parameters

----------

num_wins : int

The number of windows.

hw_wins : int

The half width of the windows in pixels.

diameter : int

The diameter of the kernel to use in peak finding in pixels. It must be an odd integer.

index_filter : slice

The index slice of the data to use in the calculation of the dark and light image.

args :

The position arguments of the peak finding function trackpy.locate.

kwargs :

The keyword arguments of the peak finding function trackpy.locate.

Returns

-------

None. The calculation results are saved in attributes.

Here we process the data. The new algorithm is a two-run-through algorithm so there are two status bars. First one show the status of the calculation of light and dark image and the second one shows the status of the calculation of the crystal maps.

calculator.auto_process(num_wins=4, hw_wins=25, diameter=41)

100%|██████████| 100/100 [00:37<00:00, 2.65it/s]

100%|██████████| 101/101 [00:28<00:00, 3.53it/s]

Visualize the data¶

All the final, intermediate and raw data can be visualized. The methods to visualize them starts with “show”. Here, we show two examples.

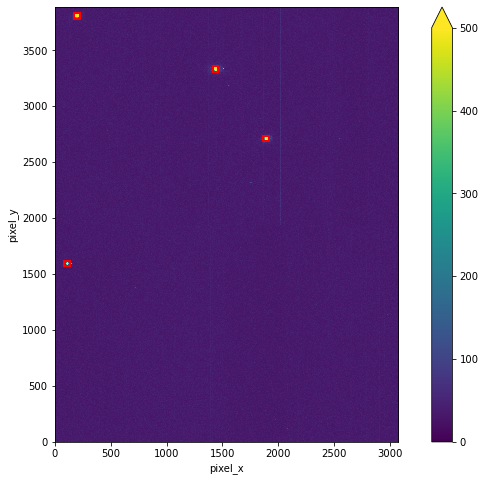

Here, we show the windows on the dark subtracted light image.

calculator.show_windows(vmax=500, size=8);

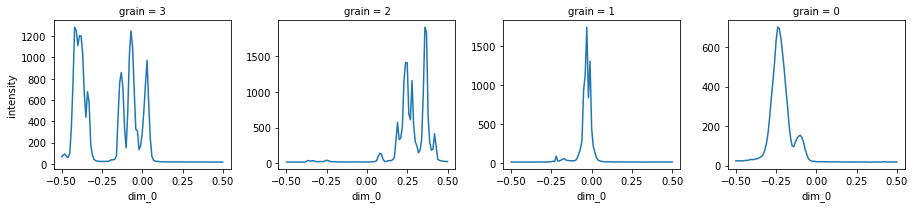

Then, we show the final rocking curves plot, where are the one dimensional crystal maps.

calculator.show_intensity();

Save the data¶

The data can be converted to DataSet and you can save it in multiple

formats.

ds = calculator.to_dataset()

ds

<xarray.Dataset>

Dimensions: (dim_0: 101, grain: 4, pixel_x: 3072, pixel_y: 3888)

Coordinates:

* grain (grain) int64 3 2 1 0

* dim_0 (dim_0) float64 -0.5 -0.49 -0.48 -0.47 ... 0.479 0.489 0.499

Dimensions without coordinates: pixel_x, pixel_y

Data variables:

dark (pixel_y, pixel_x) float64 300.0 303.0 300.0 ... 297.0 311.0

light (pixel_y, pixel_x) float64 339.0 339.0 336.0 ... 332.0 341.0

intensity (grain, dim_0) float64 65.69 83.61 92.62 ... 17.92 18.29 17.96

y (grain) int64 3809 3334 2712 1595

dy (grain) int64 25 25 25 25

x (grain) int64 200 1437 1890 109

dx (grain) int64 25 25 25 25

Q (grain) float64 4.38e-08 4.38e-08 4.38e-08 4.38e-08

Attributes: (12/22)

time: 1616208499.2537348

uid: 257b5581-ca78-4309-9c50-b4d65d80152a

versions: {'ophyd': '1.3.3', 'bluesky': '1.6.7'}

scan_id: 45

proposal_id: 307690

plan_type: generator

... ...

plan_pattern: outer_product

plan_pattern_args: {'args': ["EpicsMotor(prefix='XF:28IDD-ES:2{Stg:Sta...

plan_pattern_module: bluesky.plan_patterns

task: a single point rocking curve

sample: PARADIM-2

beam: slit- dim_0: 101

- grain: 4

- pixel_x: 3072

- pixel_y: 3888

- grain(grain)int643 2 1 0

array([3, 2, 1, 0])

- dim_0(dim_0)float64-0.5 -0.49 -0.48 ... 0.489 0.499

array([-5.0000e-01, -4.9001e-01, -4.8002e-01, -4.7003e-01, -4.6004e-01, -4.5005e-01, -4.4006e-01, -4.3007e-01, -4.2008e-01, -4.1009e-01, -4.0010e-01, -3.9011e-01, -3.8012e-01, -3.7013e-01, -3.6014e-01, -3.5015e-01, -3.4016e-01, -3.3017e-01, -3.2018e-01, -3.1019e-01, -3.0020e-01, -2.9021e-01, -2.8022e-01, -2.7023e-01, -2.6024e-01, -2.5025e-01, -2.4026e-01, -2.3027e-01, -2.2028e-01, -2.1029e-01, -2.0030e-01, -1.9031e-01, -1.8032e-01, -1.7033e-01, -1.6034e-01, -1.5035e-01, -1.4036e-01, -1.3037e-01, -1.2038e-01, -1.1039e-01, -1.0040e-01, -9.0410e-02, -8.0420e-02, -7.0430e-02, -6.0440e-02, -5.0450e-02, -4.0460e-02, -3.0470e-02, -2.0480e-02, -1.0490e-02, -5.0000e-04, 9.4900e-03, 1.9480e-02, 2.9470e-02, 3.9460e-02, 4.9450e-02, 5.9440e-02, 6.9430e-02, 7.9420e-02, 8.9410e-02, 9.9400e-02, 1.0939e-01, 1.1938e-01, 1.2937e-01, 1.3936e-01, 1.4935e-01, 1.5934e-01, 1.6933e-01, 1.7932e-01, 1.8931e-01, 1.9930e-01, 2.0929e-01, 2.1928e-01, 2.2927e-01, 2.3926e-01, 2.4925e-01, 2.5924e-01, 2.6923e-01, 2.7922e-01, 2.8921e-01, 2.9920e-01, 3.0919e-01, 3.1918e-01, 3.2917e-01, 3.3916e-01, 3.4915e-01, 3.5914e-01, 3.6913e-01, 3.7912e-01, 3.8911e-01, 3.9910e-01, 4.0909e-01, 4.1908e-01, 4.2907e-01, 4.3906e-01, 4.4905e-01, 4.5904e-01, 4.6903e-01, 4.7902e-01, 4.8901e-01, 4.9900e-01])

- dark(pixel_y, pixel_x)float64300.0 303.0 300.0 ... 297.0 311.0

array([[300., 303., 300., ..., 332., 334., 336.], [299., 306., 298., ..., 319., 327., 321.], [294., 310., 286., ..., 328., 321., 328.], ..., [335., 326., 330., ..., 294., 289., 308.], [329., 329., 322., ..., 307., 284., 310.], [331., 335., 320., ..., 305., 297., 311.]]) - light(pixel_y, pixel_x)float64339.0 339.0 336.0 ... 332.0 341.0

array([[339., 339., 336., ..., 365., 371., 371.], [331., 338., 327., ..., 349., 359., 367.], [330., 351., 323., ..., 358., 359., 363.], ..., [369., 371., 362., ..., 327., 329., 351.], [365., 371., 362., ..., 337., 321., 344.], [374., 373., 375., ..., 335., 332., 341.]]) - intensity(grain, dim_0)float6465.69 83.61 92.62 ... 18.29 17.96

array([[ 65.68896578, 83.61399462, 92.62322184, 67.08227605, 60.94579008, 99.78392926, 349.04344483, 767.14340638, 1284.76970396, 1253.59400231, 1111.81545559, 1205.10111496, 1202.78008458, 1036.17301038, 665.57362553, 438.7135717 , 679.0911188 , 576.35601692, 182.34871203, 84.47327951, 43.06305267, 31.28604383, 26.37408689, 24.64167628, 22.94925029, 23.18762015, 22.63360246, 23.4840446 , 24.13533256, 24.14186851, 32.94925029, 39.15955402, 38.71818531, 42.97270281, 77.62860438, 449.23298731, 766.34563629, 859.0626682 , 711.88735102, 337.99692426, 150.49250288, 484.59515571, 1015.0615148 , 1249.66474433, 1098.24221453, 701.61322568, 326.30757401, 310.70357555, 133.43137255, 170.757401 , 290.03537101, 495.9077278 , 746.0142253 , 973.31026528, 578.04652057, 239.19146482, 70.95693964, 34.69319493, 26.58246828, 24.19184929, 22.60899654, 20.38754325, 19.6189927 , 18.87043445, 19.25297962, 18.46905037, 18.70319108, 18.17916186, 18.35217224, 18.03152634, 18.03498654, 17.787005 , 18.8781238 , 18.25105729, 18.22376009, 18.29950019, 16.66051519, 17.72126105, 18.19607843, 18.58362168, ... 518.91349481, 633.96693579, 701.76701269, 694.54402153, 643.96386005, 568.69204152, 490.14455978, 395.6070742 , 307.99653979, 210.47635525, 140.35870819, 98.9561707 , 95.44675125, 120.39715494, 137.95693964, 148.76662822, 153.4709727 , 143.23760092, 119.76816609, 82.44290657, 57.46482122, 35.90234525, 26.97962322, 22.91926182, 21.73625529, 21.08150711, 20.05574779, 18.49211842, 20.6343714 , 18.73394848, 19.816609 , 19.58400615, 19.14417532, 19.26412918, 18.90811226, 18.56132257, 18.21107266, 18.9869281 , 18.89734717, 18.11649366, 19.28296809, 18.41560938, 17.52210688, 18.27681661, 18.25874664, 17.79123414, 17.75816993, 19.06113033, 17.56978085, 18.28527489, 18.04575163, 18.59900038, 17.58900423, 18.24221453, 18.05997693, 18.56247597, 17.8023837 , 18.63321799, 17.58708189, 17.77316417, 17.40522876, 18.56978085, 17.36793541, 18.02191465, 17.81814687, 18.16532103, 17.83660131, 18.31218762, 19.31987697, 19.13302576, 18.09611688, 18.34717416, 18.41830065, 18.3775471 , 17.91926182, 18.29065744, 17.95809304]]) - y(grain)int643809 3334 2712 1595

array([3809, 3334, 2712, 1595])

- dy(grain)int6425 25 25 25

array([25, 25, 25, 25])

- x(grain)int64200 1437 1890 109

array([ 200, 1437, 1890, 109])

- dx(grain)int6425 25 25 25

array([25, 25, 25, 25])

- Q(grain)float644.38e-08 4.38e-08 4.38e-08 4.38e-08

array([4.38002971e-08, 4.38002488e-08, 4.38002742e-08, 4.38005168e-08])

- time :

- 1616208499.2537348

- uid :

- 257b5581-ca78-4309-9c50-b4d65d80152a

- versions :

- {'ophyd': '1.3.3', 'bluesky': '1.6.7'}

- scan_id :

- 45

- proposal_id :

- 307690

- plan_type :

- generator

- plan_name :

- rel_grid_scan

- detectors :

- ['dexela']

- motors :

- ['mPhi']

- num_points :

- 1001

- num_intervals :

- 1000

- plan_args :

- {'detectors': ["XPDDDexelaDetector(prefix='XF:28IDD-ES:2{Det:DEX}', name='dexela', read_attrs=['stats1', 'stats1.total', 'tiff'], configuration_attrs=['cam', 'cam.acquire_period', 'cam.acquire_time', 'cam.image_mode', 'cam.trigger_mode', 'stats1', 'stats1.configuration_names', 'stats1.port_name', 'stats1.asyn_pipeline_config', 'stats1.blocking_callbacks', 'stats1.enable', 'stats1.nd_array_port', 'stats1.plugin_type', 'stats1.bgd_width', 'stats1.centroid_threshold', 'stats1.compute_centroid', 'stats1.compute_histogram', 'stats1.compute_profiles', 'stats1.compute_statistics', 'stats1.hist_max', 'stats1.hist_min', 'stats1.hist_size', 'stats1.profile_cursor', 'stats1.profile_size', 'stats1.ts_num_points', 'tiff', 'detector_type'])"], 'args': ["EpicsMotor(prefix='XF:28IDD-ES:2{Stg:Stack-Ax:Phi}Mtr', name='mPhi', settle_time=0.0, timeout=None, read_attrs=['user_readback', 'user_setpoint'], configuration_attrs=['user_offset', 'user_offset_dir', 'velocity', 'acceleration', 'motor_egu'])", -0.5, 0.5, 1001], 'per_step': 'None'}

- hints :

- {'gridding': 'rectilinear', 'dimensions': [[['mPhi'], 'primary']]}

- shape :

- [101]

- extents :

- [[-0.5, 0.499]]

- snaking :

- [False]

- plan_pattern :

- outer_product

- plan_pattern_args :

- {'args': ["EpicsMotor(prefix='XF:28IDD-ES:2{Stg:Stack-Ax:Phi}Mtr', name='mPhi', settle_time=0.0, timeout=None, read_attrs=['user_readback', 'user_setpoint'], configuration_attrs=['user_offset', 'user_offset_dir', 'velocity', 'acceleration', 'motor_egu'])", -0.5, 0.5, 1001]}

- plan_pattern_module :

- bluesky.plan_patterns

- task :

- a single point rocking curve

- sample :

- PARADIM-2

- beam :

- slit

Here, we save it in NetCDF format. Before it is saved, the attrs

need to be cleaned.

ds.attrs = {}

ds.to_netcdf("data/example.nc")

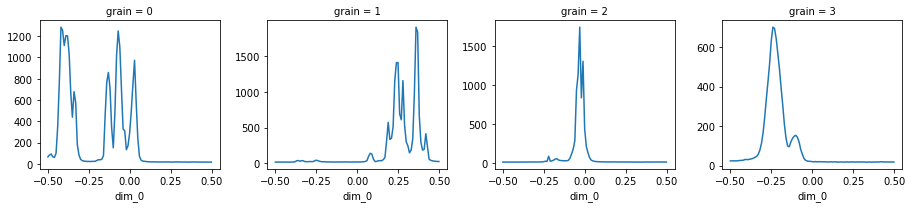

Load the dataset and visualize it¶

The data can be loaded and visualized again after the data processing session is over.

import xarray as xr

ds = xr.load_dataset("data/example.nc")

calculator.auto_visualize(ds);